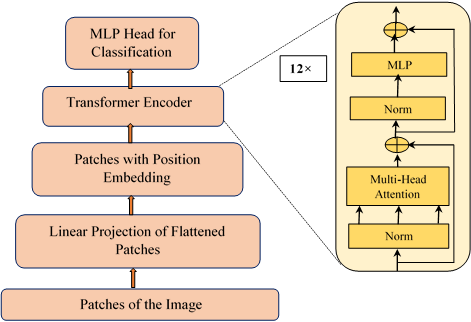

"Deep Learning models are promising solutions for most of the artificial intelligence and machine learning applications in various fields like safety and transportation, medical field, weather forecasting and many more. State-of-the- art deep neural networks can have hundreds of millions of parameters, and it makes them less than ideal for mass adoption in devices with constrained memory and power requirements like edge computing devices and mobile devices. Techniques like quantization and inducing sparsity, aims to reduce the total number of computations needed for deep learning inference. General purpose computing hardware like CPUs (Central Processing Units) and GPUs (Graphic Processing Units) are not optimized for portable embedded applications as they are not energy efficient. Field Programmable Gate Arrays (FPGAs) are suitable candidates for edge computing, which delivers decent power consumption, with flexibility. We investigate on efficient architectures that aims to make deep learning inference faster and more energy efficient and reducing the

memory-bandwidth bottleneck."